Computer Vision and Regulation

By Hong (Sophie) Yang | July 3, 2020

What is computer vision?

Computer vision is a field of study focused on training computers to see.

“At an abstract level, the goal of computer vision problems is to use the observed image data to infer something about the world.”

(Page 83, Computer Vision: Models, Learning, and Inference, 2012).

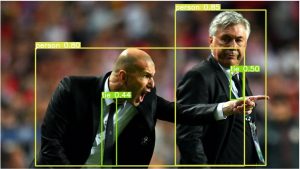

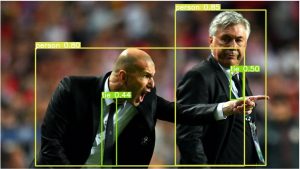

The goal of computer vision is to understand the content of digital images. Typically, this involves developing methods that attempt to reproduce the capabilities of human vision. Understanding the content of an image may involve extracting a description from it, such as an object, a text description, or a three-dimensional model. Object detection is one computer vision task. During inference, a trained object detection model draws bounding boxes around the objects it finds, using its weights (the parameters learned during training on labeled images). Each bounding box is given by its xmin, ymin, xmax, and ymax coordinates, together with a confidence score for the prediction.
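To make the detector output described above concrete, here is a minimal sketch of how a single detection (corner coordinates plus a confidence score) might be represented and filtered. The `Detection` class, the `keep_confident` helper, the 0.5 threshold, and the sample values are hypothetical illustrations, not the output format of YOLO or any particular library.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class Detection:
    """One predicted object (hypothetical format): a box in pixel corner coordinates plus a score."""
    label: str
    xmin: float
    ymin: float
    xmax: float
    ymax: float
    confidence: float  # the model's score for this prediction, in [0, 1]

    @property
    def width(self) -> float:
        return self.xmax - self.xmin

    @property
    def height(self) -> float:
        return self.ymax - self.ymin

    @property
    def area(self) -> float:
        return self.width * self.height


def keep_confident(detections: list[Detection], threshold: float = 0.5) -> list[Detection]:
    """Drop boxes whose confidence is below the threshold (0.5 is an assumed default)."""
    return [d for d in detections if d.confidence >= threshold]


if __name__ == "__main__":
    # Hypothetical detector output for a single image.
    raw = [
        Detection("person", 34.0, 50.0, 120.0, 300.0, 0.91),
        Detection("dog", 200.0, 180.0, 310.0, 290.0, 0.47),
    ]
    for d in keep_confident(raw):
        print(d.label, d.area, d.confidence)  # prints: person 21500.0 0.91
```

Raising or lowering the threshold trades missed objects against false alarms; the right value depends on the application.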

Use cases of Computer Vision

The following are well-researched areas where computer vision has been applied successfully:

- Optical character recognition (OCR)

- Machine inspection

- Retail (e.g. automated checkouts)

- 3D model building (photogrammetry)

- Medical imaging

- Automotive safety

- Match move (e.g. merging CGI with live actors in movies)

- Motion capture (mocap)

- Surveillance

- Landmark detection

- Fingerprint recognition and biometrics

Computer vision is a broad field with many specialized tasks and techniques, as well as specializations targeting particular application domains.

From YOLO to Object Detection Ethical Issues

YOLO (You Only Look Once) is a real-time object detection model created by Joseph Redmon in May 2016. YOLOv5, released in June 2020 by a different team (Ultralytics), is the most recent state-of-the-art version at the time of writing. YOLO addresses a core, low-level computer vision problem: detecting and classifying objects in a single pass of the network. More tools can be built on top of the YOLO model, from autonomous driving to real-time cancer cell detection.

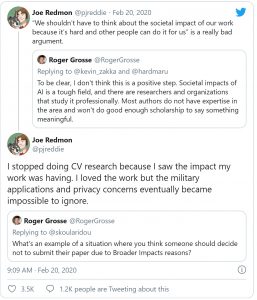

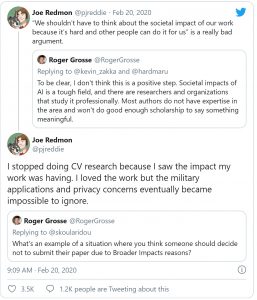

In February 2020, news broke that shocked the machine learning community: Joseph Redmon announced that he had stopped his computer vision research to avoid enabling potential misuse of the technology, citing in particular “military applications and privacy concerns.”

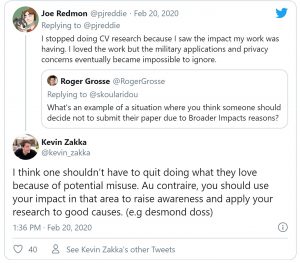

Redmon's announcement came in the middle of a discussion about the “broader impact of AI work including possible societal consequences – both positive and negative” and the question, “someone should decide not to submit their paper due to Broader Impacts reasons?” Redmon stepped in to offer his own experience: despite enjoying his work, he tweeted, he had stopped his CV research because the related ethical issues “became impossible to ignore.”

Redmon said he felt a certain degree of humiliation for ever believing “science was apolitical and research objectively moral and good no matter what the subject is.” He said he had come to realize that facial recognition technologies have more downside than upside, and that they would not be developed if enough researchers considered the broader impact of their enormous downside risks.

When Redmon released YOLOv3 in 2018, he wrote in the accompanying paper about the implications of having detectors such as YOLO: “If humans have a hard time telling the difference, how much does it matter?” On a more serious note, he asked: “What are we going to do with these detectors now that we have them?” He also stressed that computer vision researchers have a responsibility to consider the harm their work might be doing and to think of ways to mitigate it.

“We owe the world that much,” he wrote.

The debate raises several questions that may never be fully answered:

- Should researchers take a multidisciplinary, broader view of the implications of their work?

- Should all research be regulated in its early stages to prevent harmful societal impacts?

- Who gets to regulate the research?

- Shouldn’t experts raise awareness rather than simply quit?

- Who should pay the price: the innovators or those who apply their work?

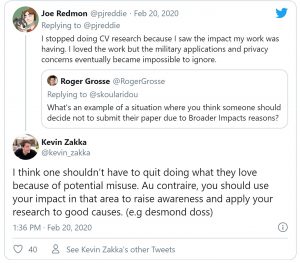

A common criticism of Redmon’s decision is that experts should not quit; instead, they should take responsibility for raising awareness of the pitfalls of AI.

A 2017 Forbes article, “Should AI be regulated”, pointed out that AI is a fundamental technology: artificial intelligence is a field of research and development, comparable to quantum mechanics, nanotechnology, biochemistry, nuclear energy, or even mathematics. All of these could have scary or evil applications, but regulating them at the fundamental level would inevitably hinder advances, some of which could have a far more positive impact than we can envision now. What should be heavily regulated is the use of AI in dangerous applications, such as weapons. This leads to the tough questions: who should regulate it, and at what level?
