Discriminatory practices in interest-based advertising

By Anonymous | June 26th, 2020

Economics and ethics

The multi-billion dollar online advertising industry is incentivised to ensure that ad dollars convert into sales, or at least into high click-through rates. Happy clients equate to healthy revenues. The way to realize this goal is to match the right pair of eyeballs to each ad – quality, not quantity, matters.

Interest-based ads (sometimes referred to as personalized or targeted ads) are strategically placed for specific viewers. Viewers may be selected on immutable traits like race, gender and age, or on their online behavioral patterns. Unfortunately, both approaches are responsible for amplifying racial stereotypes and deepening social inequality.

Baby and the bathwater

Dark ads exclude a person or group from seeing an ad by targeting viewers based on an immutable characteristic, such as sex or race. This is not to be confused with the notion of big data exclusion, where ‘datafication unintentionally ignores or even smothers the unquantifiable, immeasurable, ineffable parts of human experience.’ Instead, dark ads refer to a deliberate act by advertisers to shut out certain communities from their product or service offerings.

Furthermore, a behaviorally targeted ad can act as a social label even when it contains no explicit labeling information. When consumers recognize that the marketer has made an inference about their identity in order to serve them the ad, the ad itself functions as an implied social label.

Source: The Guardian

That said, it’s not all bad news with these personalized ads. While there are calls to simply ban targeted advertising, one could argue for the benefits of having public health campaigns, say, delivered in the right language to the right populace. Targeted social programs could also be more effective if they reach the eyes and ears that need them. To take away this potentially powerful tool for social good is at best a lazy approach to solving the conundrum.

Regulatory oversight

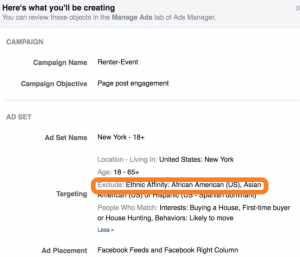

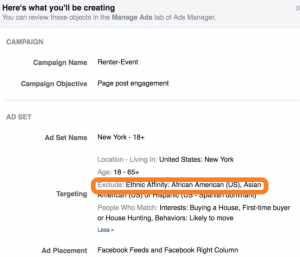

In 2018, the U.S. Department of Housing and Urban Development (HUD) filed a complaint against Facebook, alleging that the social media platform had violated the Fair Housing Act. According to the complaint, Facebook’s ad targeting tools enabled advertisers to express unlawful preferences by suggesting discriminatory options, and Facebook effectuated the delivery of housing-related ads to certain users and not others based on those users’ actual or imputed protected traits.

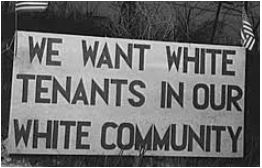

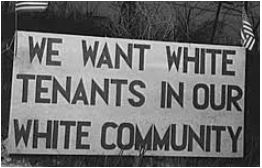

Source: The Truman Library

A 2016 investigation by ProPublica found that Facebook allowed advertisers to create housing ads that excluded Black people. Facebook’s privacy and public policy manager defended the practice, underlining the importance for advertisers of being able to both include and exclude groups as they test how their marketing performs – never mind that A/B testing itself often straddles the grey area in the ethics of human subject research.

Source: ProPublica

Opinion

Insofar as online businesses depend on advertising revenue, which is dictated by user traffic, interest-based ads are here to stay. Stakeholders with commercial interests will continue to defend their marketing tools with benevolent use cases. Lawmakers need to consistently address the harm itself: deliberate exclusions (and not just the ones arising from algorithmic bias and opacity) exacerbate the inequalities caused by discriminatory practices in the physical world.

In the example above, HUD did well to call out Facebook’s transgressions, which are no less serious than those of the Jim Crow era. As a society, we have moved forward with Brown v. Board of Education. Let us not slip back into complacency by justifying segregationist acts and becoming complicit in a return to Plessy v. Ferguson.
